MIT Technology Review got to try out Astra in a closed-door live demo last week. It was a stunning experience, but there’s a gulf between polished promo and live demo.

Astra uses Gemini 2.0’s built-in agent framework to answer questions and carry out tasks via text, speech, image, and video, calling up existing Google apps like Search, Maps, and Lens when it needs to. “It’s merging together some of the most powerful information retrieval systems of our time,” says Bibo Xu, product manager for Astra.

Gemini 2.0 and Astra are joined by Mariner, a new agent built on top of Gemini that can browse the web for you; Jules, a new Gemini-powered coding assistant; and Gemini for Games, an experimental assistant that you can chat to and ask for tips as you play video games.

(And let’s not forget that in the last week Google DeepMind also announced Veo, a new video generation model; Imagen 3, a new version of its image generation model; and Willow, a new kind of chip for quantum computers. Whew. Meanwhile, CEO Demis Hassabis was in Sweden yesterday receiving his Nobel Prize.)

Google DeepMind claims that Gemini 2.0 is twice as fast as the previous version, Gemini 1.5, and outperforms it on a number of standard benchmarks, including MMLU-Pro, a large set of multiple-choice questions designed to test the abilities of large language models across a range of subjects, from math and physics to health, psychology, and philosophy.

But the margins between top-end models like Gemini 2.0 and those from rival labs like OpenAI and Anthropic are now slim. These days, advances in large language models are less about how good they are and more about what you can do with them.

And that’s where agents come in.

Hands on with Project Astra

Last week I was taken through an unmarked door on an upper floor of a building in London’s King’s Cross district into a room with strong secret-project vibes. The word “ASTRA” was emblazoned in giant letters across one wall. Xu’s dog, Charlie, the project’s de facto mascot, roamed between desks where researchers and engineers were busy building a product that Google is betting its future on.

“The pitch to my mum is that we’re building an AI that has eyes, ears, and a voice. It can be anywhere with you, and it can help you with anything you’re doing,” says Greg Wayne, co-lead of the Astra team. “It’s not there yet, but that’s the kind of vision.”

The official term for what Xu, Wayne, and their colleagues are building is “universal assistant.” They’re still figuring out exactly what that means.

At one end of the Astra room were two stage sets that the team uses for demonstrations: a drinks bar and a mocked-up art gallery. Xu took me to the bar first. “A long time ago we hired a cocktail expert and we got them to instruct us to make cocktails,” said Praveen Srinivasan, another co-lead. “We recorded those conversations and used that to train our initial model.”

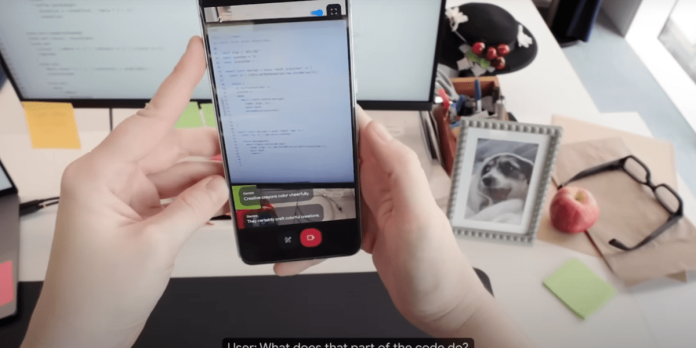

Xu opened a cookbook to a recipe for a chicken curry, pointed her phone at it, and woke up Astra. “Ni hao, Bibo!” said a female voice.

“Oh! Why are you speaking to me in Mandarin?” Xu asked her phone. “Can you speak to me in English, please?”

“My apologies, Bibo. I was following a previous instruction to speak in Mandarin. I will now speak in English as you have requested.”

Astra remembers previous conversations, Xu told me. It also keeps track of the previous 10 minutes of video. (There’s a remarkable moment in the promo video that Google put out in May when Astra tells the person giving the demo where she had left her glasses, having spotted them on a desk a few seconds earlier. But I saw nothing like this in the live demo.)

Back to the cookbook. Moving her phone camera over the page for a few seconds, Xu asked Astra to read the recipe and tell her what spices were in it. “I recall the recipe mentioning a teaspoon of black peppercorns, a teaspoon of hot chili powder, and a cinnamon stick,” it replied.

“I think you’re missing a few,” said Xu. “Take another look.”

“You’re correct. I apologize. I also see ground turmeric and curry leaves in the ingredients.”

Seeing this tech in action, two things hit you right away. First, it’s glitchy and often needs correcting. Second, those glitches can be corrected with just a few spoken words. You simply interrupt the voice, repeat your instructions, and move on. It feels more like coaching a child than butting heads with broken software.

Next Xu pointed her phone at a row of wine bottles and asked Astra to pick the one that would go best with the chicken curry. It went for a rioja and explained why. Xu asked how much a bottle would cost. Astra said it would need to use Search to look prices up online. A few seconds later it came back with its answer.

We moved to the art gallery, and Xu showed Astra a number of screens with famous paintings on them: the Mona Lisa, Munch’s The Scream, a Vermeer, a Seurat, and several others. “Ni hao, Bibo!” the voice said.

“You’re speaking to me in Mandarin again,” Xu said. “Try to speak to me in English, please.”

“My apologies, I seem to have misunderstood. Yes, I will respond in English.” (I should know better, but I could swear I heard the snark.)

It was my turn. Xu handed me her phone.

I tried to trip Astra up, but it was having none of it. I asked it what famous art gallery we were in, but it refused to hazard a guess. I asked why it had identified the paintings as replicas and it started to apologize for its mistake (Astra apologizes a lot). I was compelled to interrupt: “No, no, you’re right, it’s not a mistake. You’re correct to identify paintings on screens as fake paintings.” I couldn’t help feeling a bit bad: I’d confused an app that exists only to please.

When it works well, Astra is enthralling. The experience of striking up a conversation with your phone about whatever you’re pointing it at feels fresh and seamless. In a media briefing yesterday, Google DeepMind shared a video showing off other uses: reading an email on your phone’s screen to find a door code (and then reminding you of that code later), pointing a phone at a passing bus and asking where it goes, quizzing it about a public artwork as you walk past. This could be generative AI’s killer app.

And yet there’s a long way to go before most people get their hands on tech like this. There’s no mention of a release date. Google DeepMind has also shared videos of Astra working on a pair of smart glasses, but that tech is even further down the company’s wish list.

Mixing it up

For now, researchers outside Google DeepMind are keeping a close eye on its progress. “The way that things are being combined is impressive,” says Maria Liakata, who works on large language models at Queen Mary University of London and the Alan Turing Institute. “It’s hard enough to do reasoning with language, but here you need to bring in images and more. That’s not trivial.”

Liakata is also impressed by Astra’s ability to recall things it has seen or heard. She works on what she calls long-range context, getting models to keep track of information that they have come across before. “This is exciting,” says Liakata. “Even doing it in a single modality is exciting.”

But she admits that a lot of her assessment is guesswork. “Multimodal reasoning is really cutting-edge,” she says. “But it’s very hard to know exactly where they’re at, because they haven’t said a lot about what is in the technology itself.”

For Bodhisattwa Majumder, a researcher who works on multimodal models and agents at the Allen Institute for AI, that’s a key concern. “We absolutely don’t know how Google is doing it,” he says.

He notes that if Google were to be a little more open about what it is building, it would help consumers understand the limitations of the tech they could soon be holding in their hands. “They need to know how these systems work,” he says. “You want a user to be able to see what the system has learned about you, to correct mistakes, or to remove things you want to keep private.”

Liakata is also worried about the implications for privacy, pointing out that people could be monitored without their consent. “I think there are things I’m excited about and things that I’m concerned about,” she says. “There’s something about your phone becoming your eyes. There’s something unnerving about it.”

“The impact these products will have on society is so big that it should be taken more seriously,” she says. “But it’s become a race between the companies. It’s problematic, especially since we don’t have any agreement on how to evaluate this technology.”

Google DeepMind says it takes a long, hard look at privacy, security, and safety for all its new products. Its tech will be tested by teams of trusted users for months before it hits the public. “Obviously, we’ve got to think about misuse. We’ve got to think about, you know, what happens when things go wrong,” says Dawn Bloxwich, director of responsible development and innovation at the company. “There’s huge potential. The productivity gains are huge. But it is also risky.”

No team of testers can anticipate all the ways that people will use and misuse new technology. So what’s the plan for when the inevitable happens? Companies need to design products that can be recalled or switched off just in case, says Bloxwich: “If we need to make changes quickly or pull something back, then we can do that.”