AI models work by training on huge swaths of data from the internet. But as AI is increasingly being used to pump out web pages filled with junk content, that process is in danger of being undermined.

New research published in Nature shows that the quality of a model’s output gradually degrades when AI trains on AI-generated data. As subsequent models produce output that is then used as training data for future models, the effect gets worse.

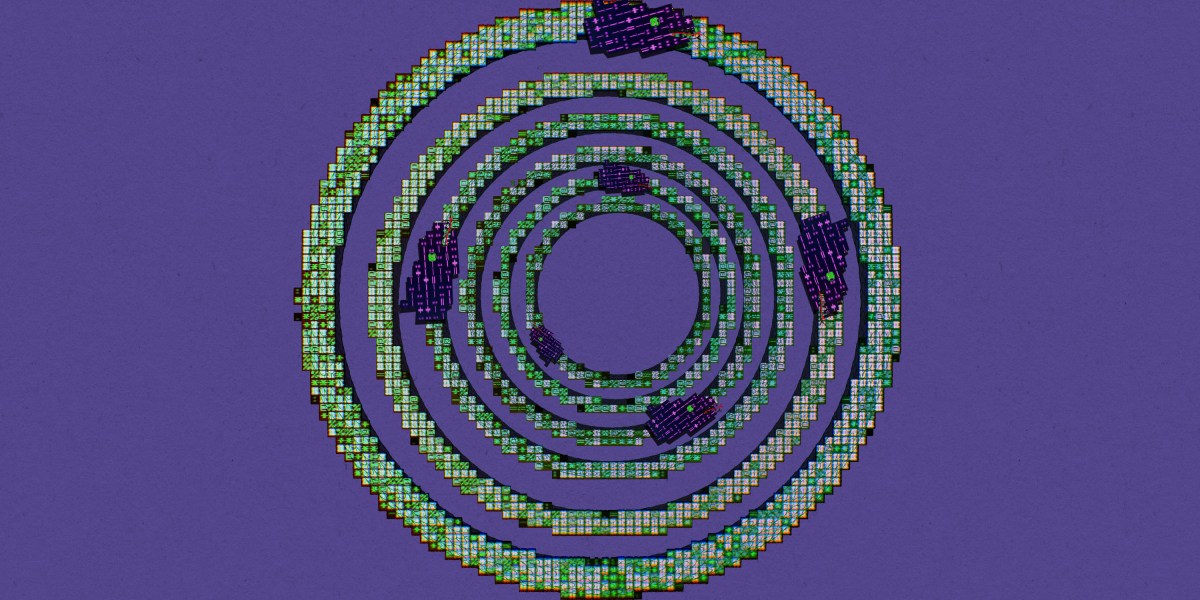

Ilia Shumailov, a computer scientist from the University of Oxford who led the study, likens the process to taking photos of photos. “If you take a picture and you scan it, and then you print it, and you repeat this process over time, basically the noise overwhelms the whole process,” he says. “You’re left with a dark square.” The equivalent of the dark square for AI is called “model collapse,” he says, meaning the model just produces incoherent garbage.

This research may have serious implications for today’s largest AI models, because they use the internet as their database. GPT-3, for example, was trained in part on data from Common Crawl, an online repository of over 3 billion web pages. And the problem is likely to get worse as a growing number of AI-generated junk websites start cluttering up the internet.

Current AI models aren’t simply going to collapse, says Shumailov, but there may still be substantive effects: improvements will slow down, and performance might suffer.

To determine the potential effect on performance, Shumailov and his colleagues fine-tuned a large language model (LLM) on a set of data from Wikipedia, then fine-tuned the new model on its own output over nine generations. The team measured how nonsensical the output was using a “perplexity score,” which reflects how well an AI model predicts the next part of a sequence; a higher score means a less accurate model.
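For readers curious how such a score is computed, here is a minimal Python sketch of the standard perplexity formula: the exponential of the average negative log-probability the model assigns to each token that actually comes next. The probability lists below are invented for illustration and are not figures from the study.

```python
import math


def perplexity(token_probs: list[float]) -> float:
    """Perplexity = exp(mean negative log-probability of the actual next tokens)."""
    if not token_probs:
        raise ValueError("need at least one token probability")
    mean_nll = -sum(math.log(p) for p in token_probs) / len(token_probs)
    return math.exp(mean_nll)


# Hypothetical probabilities two models assign to the same four "true" next tokens.
confident_model = [0.9, 0.8, 0.85, 0.95]
confused_model = [0.2, 0.1, 0.3, 0.05]

print(f"confident model: {perplexity(confident_model):.2f}")
print(f"confused model:  {perplexity(confused_model):.2f}")
```

A model that gives every correct token a probability of 0.25 scores a perplexity of exactly 4, as if it were guessing uniformly among four options; the more "surprised" a model is by real text, the higher its score.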

The models trained on other models’ outputs had higher perplexity scores. For example, at each generation, the team asked the model for the next sentence after the following input:

“some started before 1360—was typically accomplished by a master mason and a small team of itinerant masons, supplemented by local parish labourers, according to Poyntz Wright. But other authors reject this model, suggesting instead that leading architects designed the parish church towers based on early examples of Perpendicular.”

At the ninth and final generation, the model returned the following:

“architecture. In addition to being home to some of the world’s largest populations of black @-@ tailed jackrabbits, white @-@ tailed jackrabbits, blue @-@ tailed jackrabbits, red @-@ tailed jackrabbits, yellow @-.”

Shumailov explains what he thinks is going on using this analogy: Imagine you’re trying to find the least likely name of a student in a school. You could go through every student’s name, but it would take too long. Instead, you look at 100 of the 1,000 student names. You get a pretty good estimate, but it’s probably not the correct answer. Now imagine that another person comes along and makes an estimate based on your 100 names, but only selects 50. This second person’s estimate is going to be even further off.

“You can certainly imagine that the same happens with machine learning models,” he says. “So if the first model has seen half of the internet, then perhaps the second model is not going to ask for half of the internet, but actually scrape the latest 100,000 tweets, and fit the model on top of it.”
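To make the analogy concrete, here is a toy Python simulation. It is not the study’s experiment: the roster of 1,000 hypothetical names, the sample size of 100, and a “model” that simply memorizes name frequencies are all assumptions chosen for illustration. Each generation looks at only a sample of the previous generation’s data, fits name frequencies to that sample, and then generates a fresh roster from those frequencies.

```python
import random
from collections import Counter


def next_generation(data: list[str], sample_size: int, rng: random.Random) -> list[str]:
    """Fit a toy 'model' (empirical name frequencies) to a sample, then generate new data."""
    sample = rng.sample(data, sample_size)          # only part of the data is ever seen
    counts = Counter(sample)                        # the "model": how often each name appeared
    names = list(counts)
    weights = [counts[n] for n in names]
    # The next roster is produced entirely by the model, with the same size as before.
    return rng.choices(names, weights=weights, k=len(data))


rng = random.Random(0)
# Hypothetical school of 1,000 students: three common names and a long tail of rare ones.
population = (["Alex"] * 300 + ["Sam"] * 200 + ["Jo"] * 100
              + [f"rare_{i}" for i in range(400)])

data = population
history = [len(set(data))]                          # distinct names per generation
for _ in range(5):
    data = next_generation(data, sample_size=100, rng=rng)
    history.append(len(set(data)))

print("distinct names per generation:", history)
```

Because each new roster can only contain names that appeared in the sample before it, the number of distinct names can never go up, and the rarest names, the very ones the student in the analogy is looking for, tend to be the first to disappear.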

Moreover, the internet doesn’t hold an unlimited amount of data. To feed their appetite for more, future AI models may need to train on synthetic data, meaning data that has been produced by AI.

“Foundation models really rely on the scale of data to perform well,” says Shayne Longpre, who studies how LLMs are trained at the MIT Media Lab and who did not participate in this research. “And they’re looking to synthetic data under curated, controlled environments to be the solution to that. Because if they keep crawling more data on the web, there are going to be diminishing returns.”

Matthias Gerstgrasser, an AI researcher at Stanford who authored a different paper examining model collapse, says adding synthetic data to real-world data, instead of replacing it, doesn’t cause any major problems. But he adds: “One conclusion all the model collapse literature agrees on is that high-quality and diverse training data is important.”

Another effect of this degradation over time is that information affecting minority groups is heavily distorted in the model, because the model tends to overfocus on samples that are more prevalent in the training data.

In current models, this may affect underrepresented languages, since they require more synthetic (AI-generated) data sets, says Robert Mahari, who studies computational law at the MIT Media Lab (he did not participate in the research).

One idea that might help avoid degradation is to make sure the model gives more weight to the original human-generated data. Another part of Shumailov’s study allowed future generations to sample 10% of the original data set, which mitigated some of the negative effects.
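The mitigation can be sketched with the same kind of toy setup used to illustrate the student-name analogy. This is only an illustration under stated assumptions, not the study’s procedure: the hypothetical roster, the training-set size of 100, and the frequency-counting “model” are invented here. The one idea taken from the study is that each generation’s training data includes a fixed share (here 10%) drawn from the original data.

```python
import random
from collections import Counter

# Hypothetical original "human-generated" data: common names plus a long tail of rare ones.
ORIGINAL = (["Alex"] * 300 + ["Sam"] * 200 + ["Jo"] * 100
            + [f"rare_{i}" for i in range(400)])


def fit_and_generate(train: list[str], k: int, rng: random.Random) -> list[str]:
    """Toy 'model': learn name frequencies from `train`, then generate k new names."""
    counts = Counter(train)
    names = list(counts)
    return rng.choices(names, weights=[counts[n] for n in names], k=k)


def run(generations: int, original_fraction: float,
        train_size: int = 100, seed: int = 0) -> list[int]:
    """Return the number of distinct names in the data after each generation."""
    rng = random.Random(seed)
    data = ORIGINAL
    distinct = [len(set(data))]
    n_orig = round(original_fraction * train_size)   # examples taken from the original data
    for _ in range(generations):
        # Mostly the previous generation's output, topped up with some original data.
        train = rng.sample(data, train_size - n_orig) + rng.sample(ORIGINAL, n_orig)
        data = fit_and_generate(train, k=len(ORIGINAL), rng=rng)
        distinct.append(len(set(data)))
    return distinct


no_mixing = run(generations=9, original_fraction=0.0)
with_mixing = run(generations=9, original_fraction=0.1)
print("no original data: ", no_mixing)
print("10% original data:", with_mixing)
```

With the fraction set to zero, the set of names can only shrink from one generation to the next. Mixing in original data lets names that a previous generation dropped reappear, which is the intuition behind keeping human-generated data in the training mix.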

That would require maintaining a trail from the original human-generated data through to later generations, known as data provenance.

But provenance requires some way to sort the internet into human-generated and AI-generated content, which no one has cracked yet. Although a number of tools now exist that aim to determine whether text is AI-generated, they are often inaccurate.

“Unfortunately, we have more questions than answers,” says Shumailov. “But it’s clear that it’s important to know where your data comes from and how much you can trust it to capture a representative sample of the data you’re dealing with.”